[Want to learn about Polars? On Sunday, I'll be teaching my first-ever course on Polars. You can buy the course on its own at https://store.lerner.co.il/polars, or get it as part of my Python+data membership at https://LernerPython.com. The membership includes my entire catalog of courses, as well as twice-monthly office hours, frequent members-only lectures, a private forum where you can ask Python+Pandas questions, and a paid membership to Bamboo Weekly. Any questions? Just e-mail me at reuven@lerner.co.il.]

This week, we looked at inflation. Specifically, we wanted to know whether it's still bad, and whether it's equally bad in all countries. There's all sorts of talk about the Federal Reserve reducing interest rates when it next meets, and there is talk about the European Central Bank taking a similar step next month. If true, that would mean inflation has subsided somewhat, and that central banks can try to (gently) push the economy toward greater expansion.

Normally, I ask you to use Pandas to analyze our data. But the Polars library (https://pola.rs/) continues to draw interest and mindshare from Pandas users. That's partly because of its speed, partly because of its very clean API, and partly because it can handle larger data sets via lazy execution. Even if you won't use Polars, it's important to know how it works and how to use it, because its design will certainly come back to influence Pandas and other data-analysis libraries.

Data and six questions

This week's data come from the OECD (Organisation for Economic Co-operation and Development, https://www.oecd.org/), which the Economist calls a "club of mostly-rich countries."

I downloaded the inflation data from the OECD's data explorer:

https://data-explorer.oecd.org/vis?tm=inflation&pg=0&snb=50&df[ds]=dsDisseminateFinalDMZ&df[id]=DSD_G20_PRICES%40DF_G20_PRICES&df[ag]=OECD.SDD.TPS&df[vs]=1.0&dq=.M...PA...&lom=LASTNPERIODS&lo=13&to[TIME_PERIOD]=falseYou can view and play with the data there, in your browser. But you can also download the data in CSV format, either by clicking on the "download" button, or by using this URL:

https://sdmx.oecd.org/public/rest/data/OECD.SDD.TPS,DSD_G20_PRICES@DF_G20_PRICES,1.0/all?dimensionAtObservation=AllDimensions&format=csvfilewithlabels

This week, I gave you six questions and tasks to answer with Polars. Here are my detailed solutions; as usual, a link to my downloadable Jupyter notebook is at the end of this post.

Create a data frame from the CSV file. Keep only those columns containing ALL CAPS.

Before we can do anything else, we'll need to load up Polars. You can install it via pip:

pip install polarsTwo quick caveats, though:

- I use a Mac, and installed Python using pyenv. The version that I installed apparently runs in Intel compatibility mode, which resulted in errors when I tried to

import polars. I installed a variant of Polars,polars-lts-cpu, and the errors went away. - Because I'm constantly experimenting with packages, I installed all of the dependencies along with Polars. To do that, I used

pip install 'polars-lts-cpu[all]', combining the fixes that I needed with a bunch of other packages.

Once you have Polars installed, you can import it. Just as Pandas as a standard alias of "pd", Polars has a standard alias of "pl":

import polars as plWith that in place, we can then work with the CSV file that I downloaded from the OECD.

filename = 'OECD.SDD.TPS,DSD_G20_PRICES@DF_G20_PRICES,1.0+all.csv'

df = pl.read_csv(filename)At this point, we now have a data frame, one which looks and feels much like a Pandas data frame. But there are differences, the biggest one being the lack of an index. Polars data frames have no index; instead, you use any column, along with an expression, to select rows.

However, the data frame does have columns, and each column has a unique name. Looking at our Polars data frame, we also see the dtype for each column at the top. (You can also run df.dtypes to get the full list of dtypes.) In this data frame, much of the data is repeated, with ALL_CAPS columns containing shorter forms, and Long_names containing longer forms.

I asked you to keep only those columns with ALL_CAPS names. As in Pandas, we can pass a data frame a list of column names inside of [] to get back a new data frame with a subset of the original columns. So in theory, I could run df.columns, getting the column names, edit that list by hand to remove mixed-case names, and then pass the resulting list to df.

But there's an easier way, namely a list comprehension: I can pass it df.columns, and then only get back values where the column name is the same as the name after running via str.upper. I can then pass the resulting list to df, and assign it back to df:

df = df[[one_column_name

for one_column_name in df.columns

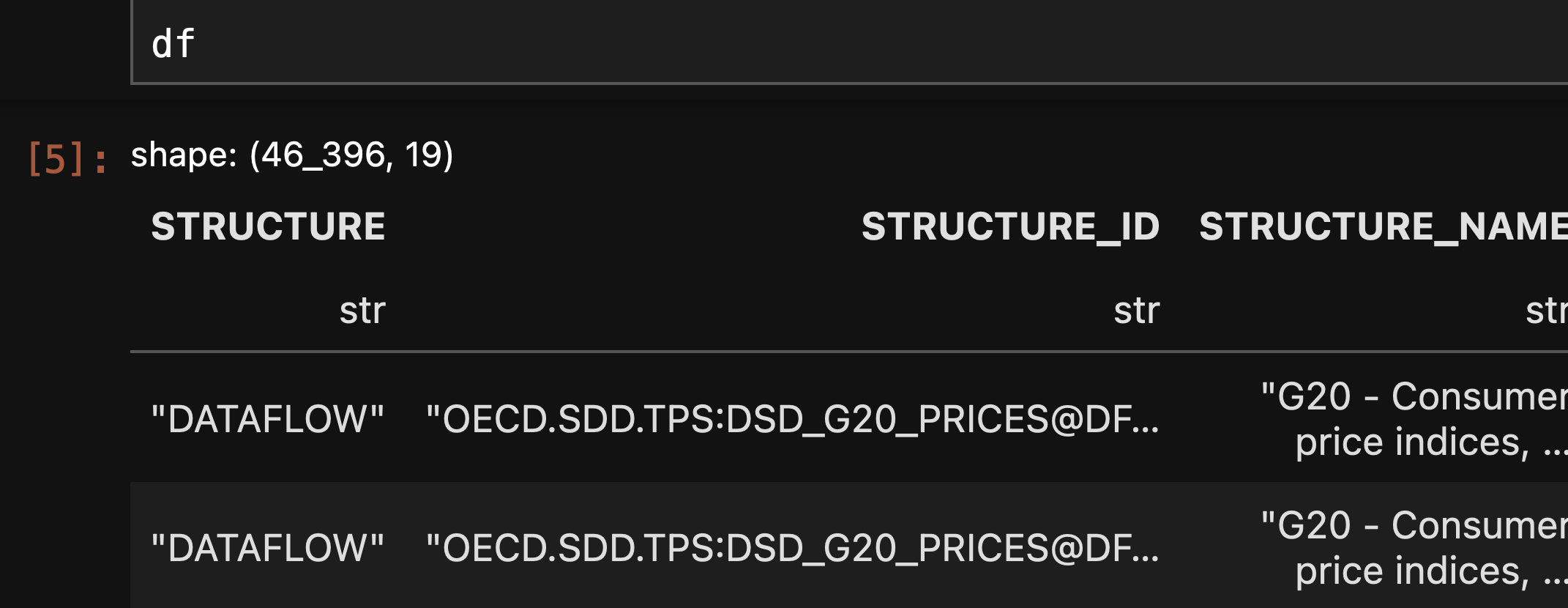

if one_column_name == one_column_name.upper()]]The result is a data frame with 46,396 rows and 19 columns. You can actually see a data frame's shape whenever it's displayed on the screen:

Remove rows in which the location is EA20, EU27_2020, or G20.

We're interested in per-country inflation, but the OECD data includes statistics about a number of country groupings – the EA20 (the 20 "Eurozone" countries that use the euro as their currency), EU27_2020 (the 27 members of the European Union, following the United Kingdom's exit in 2020), and the G20 (19 countries, the European Union, and the African Union). Those are certainly interesting and important, but I asked you to remove them from the data frame.

To select rows in Polars, we create an expression describing what we want to see, and then hand it to the filter method. But how do we create such an expression?

Assuming that it'll have to do with a column – and that's often going to be the case – we can select it by running the pl.col function. (This is technically a class, and every time we invoke pl.col, we're getting an instance of the Col class. But if they call it a function, then I feel OK doing so, too.) We pass pl.col the name of the column we want to use as a string; in this case, that'll be REF_AREA.

But then what? How can we indicate that we want rows that aren't equal to any of these three values?

One way would be to create three pl.col expressions, and & them together inside our call to pl.filter:

(

df

.filter(

(pl.col('REF_AREA') != 'EA20') &

(pl.col('REF_AREA') != 'EU27_2020') &

(pl.col('REF_AREA') != 'G20')

)

)Here, we use Python's inequality operator != three times, once for each of the string. These three expressions are combined and handed to pl.filter, and the result is a data frame in which all of the REF_AREA values represent countries, rather than groups of countries.

There's nothing wrong, per se, with filtering like this. But perhaps there's a way to tell Polars that we don't want rows with any of these three strings in REF_AREA?

Indeed there is, the is_in method. We can pass is_in a list of values, and the expression will return True if the column contains one of these values:

df = (

df

.filter(

(pl.col("REF_AREA").is_in(['EA20', 'EU27_2020', 'G20']))

)

)

This is great, except that it's the opposite of what we want: We actually want to remove such rows, not keep them.

To flip the logic of our expression, we use the ~ operator. NumPy and Pandas both use this operator in the same way, turning True into False and vice versa. We can thus say:

df = (

df

.filter(

(~pl.col("REF_AREA").is_in(['EA20', 'EU27_2020', 'G20']))

)

)

The result is a data frame in which REF_AREA doesn't have these three groupings, but does have all of the country information.